An In-Depth Look at CAST 2015

The Conference of the Association for Software Testing (CAST) 2015 was held in Grand Rapids, MI, during the first week of August. Since then I’ve been trying to reflect on what I thought, learned, liked and didn’t like. Here is that reflection in roughly 3,000 words.

tl;dr – overall CAST was great and I walked away with a lot to think about and apply to my job.

Speaking Truth to Power: Delivering Difficult Messages by Fiona Charles

When signing up for CAST, you have the option of joining a full-day tutorial the day before the conference. I signed up for Fiona Charles’ tutorial “Speaking Truth to Power: Delivering Difficult Messages” because it sounded interesting and I’d heard good things about Fiona’s workshops.

Tutorial Day

The first 10 or so minutes were a brief overview of the workshop, along with a short set of slides that were also given as a handout. The twenty or so of us sat in a giant circle, all facing each other in what could otherwise be described as an empty conference room. A large post-it board sat on an easel just outside the circle for capturing the important lessons of the day. Soon our large circle broke apart and we formed small groups to brainstorm situations where someone might have to deliver a difficult message. (It sounds easier than it really is.) Each group then wrote its scenarios on large easel-pad post-its and put them up on the wall for a vote.

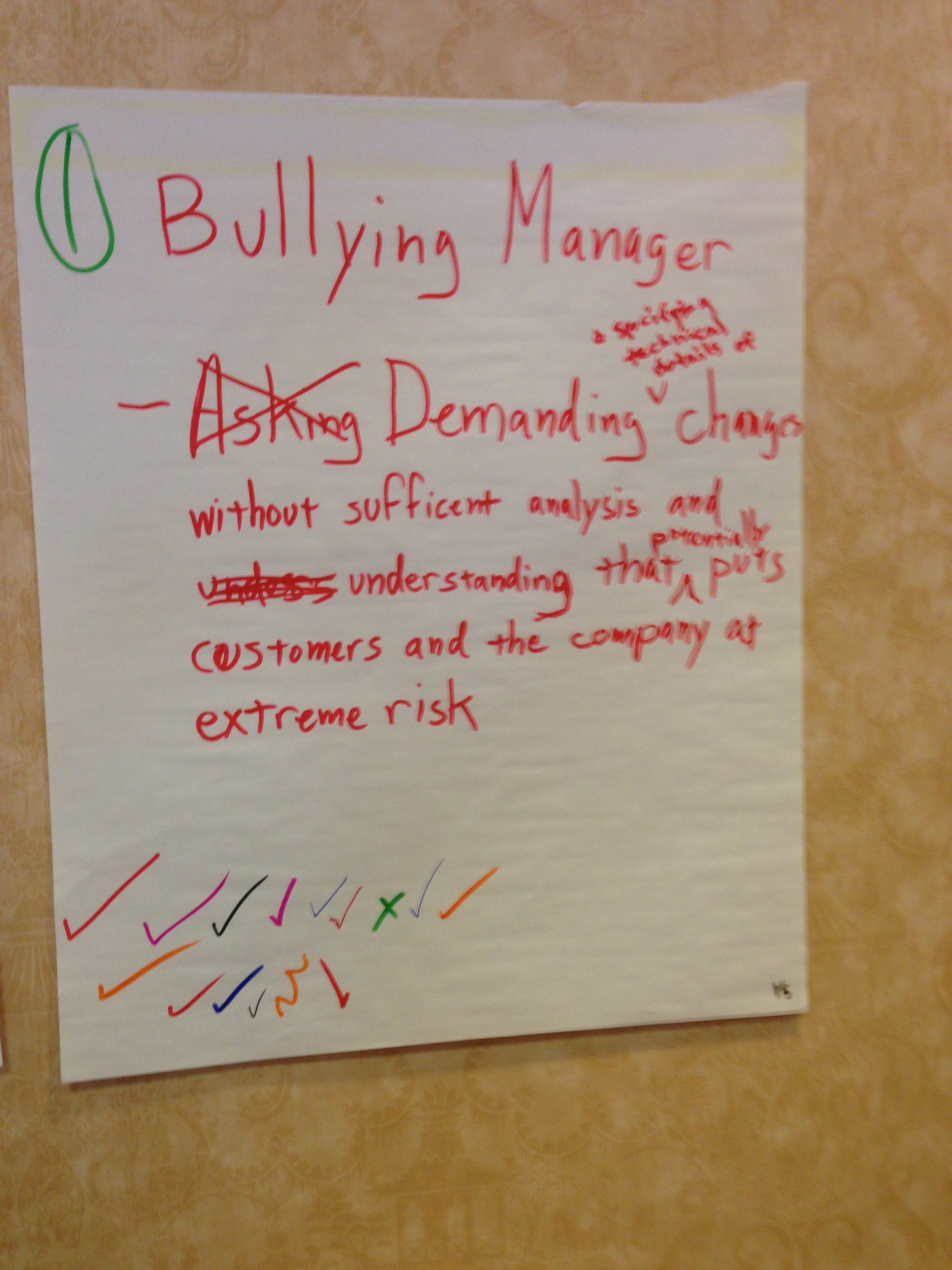

Our idea received the third-highest number of votes. In first place was a scenario called the “Bullying Manager,” and our team volunteered to be the first to re-enact it. I was to address a bullying manager. The scenario went something like this: a manager was demanding specific, technical changes without sufficient analysis or understanding, changes that potentially put customers and the company at extreme risk.

Further elaboration revealed this was a real-world situation someone had experienced. (Hopefully I get this description somewhat right.) A bug made it into production and the team responsible for the problem started trying to figure out how to fix it. At some point an executive became concerned and sent in a “special forces” team to figure it out and fix the problem for good. When this new team arrived, its manager assumed control and became very demanding. This new manager would stare over the team’s shoulder as they worked, micromanaging every decision. Then he started demanding changes to production (as in changing specific values in the code) based on nonexistent or faulty assumptions. The original team located the issue, but the new manager wasn’t interested in their solution, only in his own. One of the original team members decided to talk to him.

I’m usually not one to shy away from delivering unwelcome news, or any news for that matter. I’m fairly comfortable speaking in front of others, including people who might be higher up in the chain of command. I remember one of my former university teachers saying “they’re just people”: no matter what position, title or fame someone might hold, they’re just people, and once you understand that, it becomes a lot easier to have a conversation with someone of stature.

I volunteered to be the team member who talked to the boss. As our team attempted to role-play this scenario in front of the room, it went… ok. Eventually our lack of knowledge about the situation and our (my) general unpreparedness for dealing with an unwilling manager led the discussion into a stalemate. I suppose that’s when I really started learning something.

The group went through a number of scenarios like this, and as we went along they led to some brainstorming of lessons and take-aways. Here’s a selection of those notes as I was able to record them:

- Don’t ambush

- Prepare your info

- Share it (have a paper trail)

- Know what you want to achieve in delivering a message

- In order not to ambush, set up a day and time to talk.

- Having an open area in your calendar is a good start

- If in open spaces set clear boundaries about where you have the talks

- Talk to the right person

- Be clear about what you are asking for

- Don’t blame anyone

- Come with a solution to the problem

- How can we avoid these types of conversations?

- Communicate early and often

- Build relationships

- Talk to non-testers about testing

- Advocate for the right amount of quality

- Build credibility.

After the tutorial ended, it wasn’t immediately obvious what my take-aways were or what I had learned. I walked away processing a lot of information. Addressing the fallout of bad news isn’t something we really considered, and I wish we had. Looking back over the list above, I think my group and I could have improved in a number of ways. Frankly, I think we ambushed the manager. Then we didn’t give the manager a way to save face. Even if our solution to the problem was right and his was wrong, we probably could have given him the details of both plans and let him come to the conclusion on his own that our solution was best. Given his “status,” it probably would have made sense to let him take all the credit and for our team to simply be happy that this would eventually allow him to leave.

Thanks for the tutorial Fiona, it was a thinker!

Tester Games

Although this was my first time attending CAST, I’ve always caught either the live broadcasts or the recordings on YouTube. I can’t believe more conferences don’t do this! Sharing talks online is a great way to disseminate information, and at least for CAST that only happens because of the hard work of a few people, especially Ben Yaroch.

If you’ve watched any CAST videos, then you’ve probably seen at least one CAST Live video where Ben Yaroch and Dee Ann Pizzica interview a select number of attendees. Everything had to be up and running for Day 1, so “testing” of the setup happened on tutorial day after everyone was done. I happened to stumble past Ben and Dee Ann, and they invited a few of the attendees over to play a game or two of Catch Phrase (Testing Edition) while they recorded it.

Scott Barber and I paired up for the “bonus round” of Catch Phrase (Testing Edition). Check it out:

(For fun try watching the video on mute with only the closed captioning on. See if you can understand what we are saying.)

Day 1

Day 1 started with Karen Johnson’s very personal keynote, which looked at how she kept moving forward in testing: moving between different roles, from full-time positions to consulting, and now back to a full-time role. Karen talked about trying to maintain a work/life balance and admitted she wasn’t strong in math; although some might consider that a weakness, she didn’t let it hold her back. I highly recommend checking out Karen’s keynote and, for that matter, the whole playlist of CAST 2015 videos.

Building A Culture of Quality, A Real World Example

If I had to sum up this session, I would describe it as Josh Meier’s experience report on working in testing at Amazon and now Salesforce. [slides]

Josh started by sharing that he’s now a quality manager/architect at Salesforce but previously worked as a tester at Amazon. At every company he’s worked for, he’s tried to build and support a culture that promotes quality. To build that culture, it helps to understand what both “culture” and “quality” mean. Typically a culture is made up of the environment, behaviors, shared values and attitudes, assumptions and, generally, the way we do things. Quality is subjective, but it helps when you can articulate a definition, and that’s what Josh has done:

“A system that meets the needs of the user, is intuitive and improves on the previous release.”

Early in his career at Amazon, there were very siloed development teams where programmers would throw code over to the QA department and vice versa, which created an uncooperative culture. He decided to attend the scrum meetings, found the one programmer who was interested in pair programming/testing and started helping. He participated in code reviews, at first mostly watching and listening to what the programmers were doing. Over time he learned enough to become comfortable asking questions and started providing input. With that effort, his reputation and credibility grew, and he was eventually able to get early access to features (and access for his teammates).

At Salesforce, where he is now, testers are employed as “Quality Engineers,” although they focus heavily on automation. Over the years they have built up millions of automated checks that take several weeks to run in full. The problem is that less than one percent of check-ins break these checks, which suggests the value of running all of them is low. To get more value out of their testing efforts, he’s trying to bring more exploratory testing into the company and pair people up the way Pivotal has done.

At some point during the session Josh mentioned some examples that could help improve quality:

- Part of building the culture means having dashboards or tools that provide visual information

- Make sure engineers understand how software gets to production (it’s surprising how often this isn’t the case in a large organization)

- Make sure engineers participate in dealing with customer complaints

- Engineers can do front-line support

- “Pager duty” (or getting calls when things break)

- Showing programmers how to write checkable code

- Testing the non-happy path

- Testers partnering with programmers and vice versa

- Testers participate in code reviews

- Co-located teams or at the very least testing integrated into the development teams

- Shared accountability

My main take-away from this talk was that quality is essentially a people problem, and to improve quality you have to start one person at a time. Building trust and credibility came as he focused on each person and problem. Eventually others started seeing his progress, and his quality initiatives picked up steam and encompassed more teams.

Thanks to Josh for hanging out and allowing me to pick his brain on a number of topics. His insight was most helpful.

Should Testers Code?

This was a debate between Henrik Andersson and Jeffrey Morgan that got a lot of attention and a big attendance but really wasn’t all that useful to me. While I appreciate the two gentlemen going through the exercise and trying to make a debate of it, there were a lot of false dichotomies. At the end of the session, the conclusion among the few people I was chatting with was that this might have worked better as experience reports from three different kinds of people:

- A person who succeeded in testing (or software development) without programming skills (but good testing skills) – Extreme #1

- A person who succeeded in testing (or software development) with decent programming skills and good testing skills – Middle

- A person who succeeded in testing (or software development) with good programming skills (but good testing skills) – Extreme #2

This might have led to more discovery about the positions, their similarities and differences, and would probably have given participants a better idea of the diverging roads to success in the industry. (I’m not sure how you’d define success in this context, though.)

In the end this wildly popular (in terms of attendance) session did little for me. I suppose it helped me understand what I consider useful for a debate.

Bad Metric, Bad

Joseph Ours gave a great session on the variety and validity of measurements and metrics. In fact, it was so good that I spent my time really engaged and didn’t take a lot of notes or tweet. Here are some of the highlights from his session:

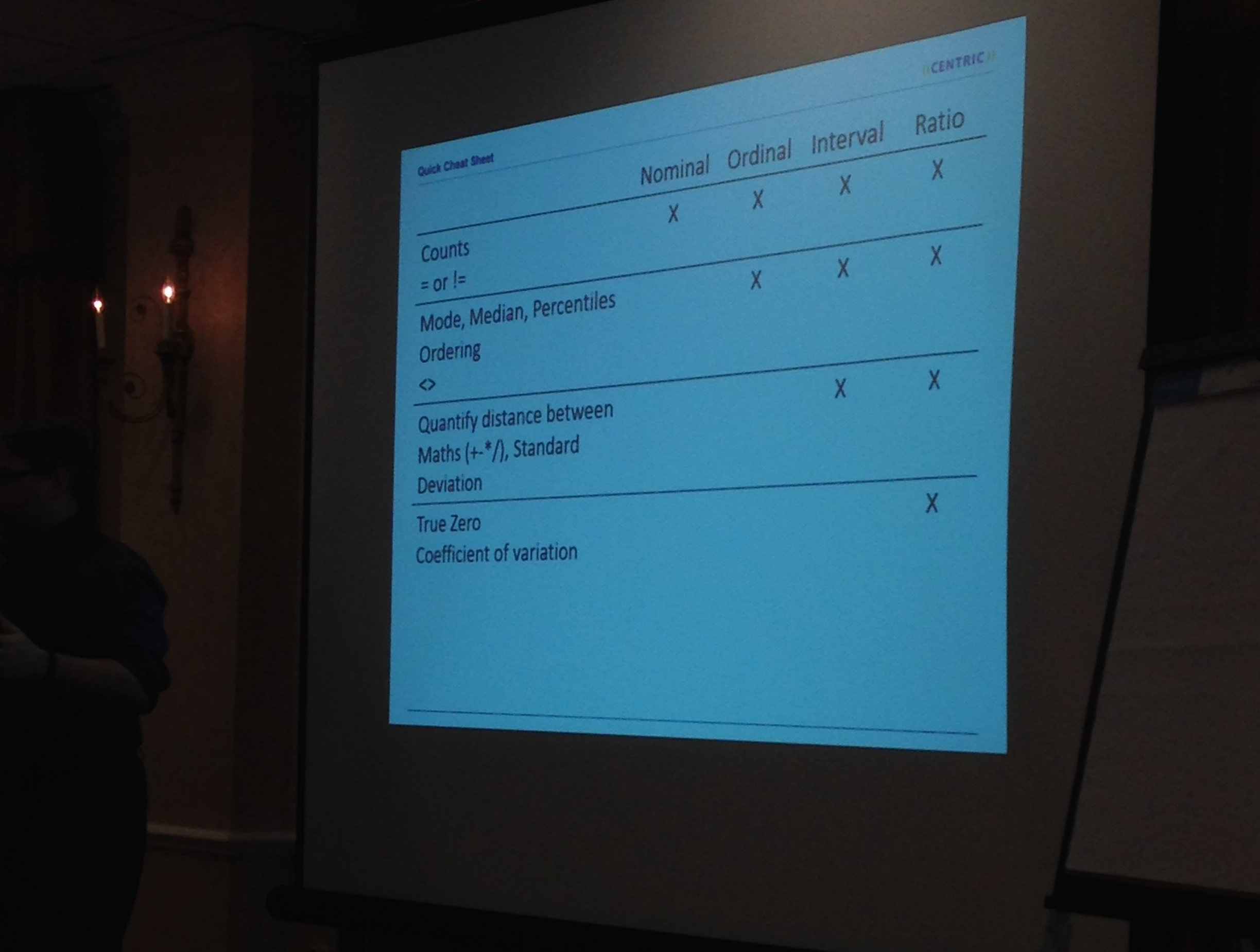

There are four levels of measurement:

- Nominal – Labels such as name or functional area. Can’t be ordered.

- Ordinal – Ranked data like priority and severity. Can be ordered.

- Interval – Distance is meaningful and consistent. Examples: time, days.

- Ratio – Same as interval but with a true zero point.

When we use metrics, we need to be careful not to violate the rules of these levels of measurement. We also need to be careful about what meaning they provide. If you count nominal data like names or test cases, you aren’t getting anything other than those labels. Those labels aren’t comparable; you can’t measure the distance between them, and you certainly aren’t considering their contents, context or meaning.
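To make the point about levels of measurement concrete, here is a minimal Python sketch of my own, not something from Joseph’s talk. It assumes the commonly cited mapping from Stevens’ typology (mode for nominal data, median for ordinal, mean for interval, ratios only for ratio data); the names `Level`, `MIN_LEVEL` and `summarize` and all of the sample data are hypothetical.

```python
from enum import IntEnum
from statistics import mean, median_low, mode


class Level(IntEnum):
    """Levels of measurement, ordered from weakest to strongest."""
    NOMINAL = 1   # labels only: names, functional areas
    ORDINAL = 2   # ranked: priority, severity
    INTERVAL = 3  # consistent distances, arbitrary zero
    RATIO = 4     # consistent distances and a true zero


# The weakest level at which each summary becomes meaningful (assumed mapping).
MIN_LEVEL = {
    "mode": Level.NOMINAL,
    "median": Level.ORDINAL,
    "mean": Level.INTERVAL,
    "ratio": Level.RATIO,
}


def summarize(values, level, op):
    """Compute op over values, refusing ops that violate the data's level."""
    if level < MIN_LEVEL[op]:
        raise ValueError(f"'{op}' is not meaningful for {level.name.lower()} data")
    if op == "mode":
        return mode(values)
    if op == "median":
        # median_low always returns an observed value, so it never averages two ranks
        return median_low(values)
    if op == "mean":
        return mean(values)
    # "ratio": how many times larger the biggest value is than the smallest
    return max(values) / min(values)


if __name__ == "__main__":
    areas = ["login", "checkout", "login", "search"]
    print(summarize(areas, Level.NOMINAL, "mode"))  # login
    severities = [1, 3, 2, 2, 4]  # hypothetical codes: 1 = critical ... 4 = low
    print(summarize(severities, Level.ORDINAL, "median"))  # 2
    fix_hours = [2.0, 4.0, 6.0]
    print(summarize(fix_hours, Level.RATIO, "ratio"))  # 3.0
    try:
        summarize(severities, Level.ORDINAL, "mean")
    except ValueError as err:
        print(err)  # 'mean' is not meaningful for ordinal data
```

The design choice is to refuse the calculation rather than quietly return a number: an average of severity codes looks like a metric but carries no real meaning, which is the kind of violation the session warned about.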

Things you *should* do with metrics #CAST2015 @justjoehere pic.twitter.com/kQcR9mAuc8

— Josh Meier (@moshjeier) August 4, 2015

One of the main take-aways I got from this session was that, generally speaking, metrics are best when they are tied to organizational goals. That way the metrics are easier to justify, it’s more obvious when they stop being effective, and they are less likely to hurt the organization when they get gamed.

The information from this session was useful, although I think it would have been better with more time for exercises and with handouts of the slides and some of the talking points. In fact, as I’m writing this, I wish I had retained more from this talk. The presentation was good, as was the information, but there was so much of it that it was hard to absorb in the time the session allowed.

End of Day

After all the sessions ended, there was still more to do. I listened to a few of the self-organized Lightning Talks that followed. As I understand it, each lightning talk was 5 minutes long, with another 5 minutes for discussion, questions, etc. None of them were recorded, but there was a lot of good stuff going on. I stayed to listen to maybe five of them, but I think they went on for quite a while longer.

#CAST2015 Lightning talks by other #testers. Topical, open mic, 5-min rounds. So cool. #learn #test #repeat pic.twitter.com/1rp6xb9aAC

— Connor Roberts (@ConnorRoberts) August 4, 2015

Day 2

Ajay’s keynote on how the future of testing is already here was full of great resources for people to learn and become better testers. Perhaps the most intriguing idea, one that still resonates with me today, is his take on the schools of software testing and how there are really only two:

- Where testing skills are seen as important

- Where they are not (or no one cares)

There’s a lot more to Ajay’s keynote and since it’s online you can check it out for yourself. The future is here.

"Boss" table at #cast2015 pic.twitter.com/h31BDcv6xj

— mheusser (@mheusser) August 5, 2015

Reason and Argument for Testers

Telling somebody their argument is invalid comes across as "you're bad." Instead, try "I think we can find a problem in reasoning" #CAST2015

— Josh Meier (@moshjeier) August 5, 2015

Scott Allman and Thomas Vaniotis gave a great but information-heavy (almost information overload) talk on how to reason about and understand an argument. This seemed like one of the more practical and insightful sessions because the point was salient: learn how to decompose something someone says or writes in order to understand where the argument and rhetoric are coming from. (At least that’s what I think it was about.)

Topics covered included exposing fact and logic to understand and scrutinize an argument, how to differentiate argument from rhetoric, truth-valued vs. non-truth-valued sentences, syllogisms, soundness, fallacies, argument analysis and many more things that I didn’t have time to write down. In fact, I think the only downside to this session was that it didn’t have enough time to cover everything they wanted. It also would have been nice to have the slides, and maybe enough time to go through a few exercises in groups so we could see how to try this in action.

Luckily, a Google search for terms like argument analysis or syllogism turns up quite a few awesome references.
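Validity and soundness are easier to grasp with a worked example. The sketch below is my own illustration, not material from the session: it uses propositional logic (a simpler cousin of the classical categorical syllogism) and brute-forces a truth table in Python. An argument is valid when no assignment of truth values makes every premise true while the conclusion is false; it is sound only if it is valid and its premises are actually true. The helper names `is_valid` and `implies` and the build/release example are hypothetical.

```python
from itertools import product


def implies(a, b):
    """Material implication: 'if a then b' is false only when a is true and b is false."""
    return (not a) or b


def is_valid(premises, conclusion, variables):
    """Valid if no assignment makes every premise true and the conclusion false."""
    for values in product([True, False], repeat=len(variables)):
        world = dict(zip(variables, values))
        if all(p(world) for p in premises) and not conclusion(world):
            return False  # found a counterexample
    return True


# Modus ponens: "If the build is green, the release ships. The build is green.
# Therefore the release ships."
modus_ponens = [lambda w: implies(w["green"], w["ships"]), lambda w: w["green"]]
print(is_valid(modus_ponens, lambda w: w["ships"], ["green", "ships"]))  # True

# Affirming the consequent (a fallacy): "If the build is green, the release ships.
# The release shipped. Therefore the build was green."
affirming = [lambda w: implies(w["green"], w["ships"]), lambda w: w["ships"]]
print(is_valid(affirming, lambda w: w["green"], ["green", "ships"]))  # False
```

Code like this can only check validity. Whether the premises are true (is the build really green?) is an empirical question that no truth table answers.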

A common rubric for testers is to examine whether premises in arguments about software behavior are true. – Scott Allman #CAST2015

— Clint Hoagland (@vsComputer) August 5, 2015

From Velcro to Velocity: A Hands On Tactile TDD Workshop

In my experience Rob Sabourin’s workshops are usually pretty fun, and the topic of Test Driven Development seemed relevant to my recent interests. The workshop started with a brief lecture and overview of all the different types of “driven” approaches to testing and development, including:

- ADT – Analytics Driven Testing

- ATDD – Acceptance Test Driven Development

- AWDT – Action Word Driven Testing (also called keyword driven testing)

- BDD – Behavior Driven Development

- MDT – Model Driven Testing

- TDD – Test Driven Development. A programming approach

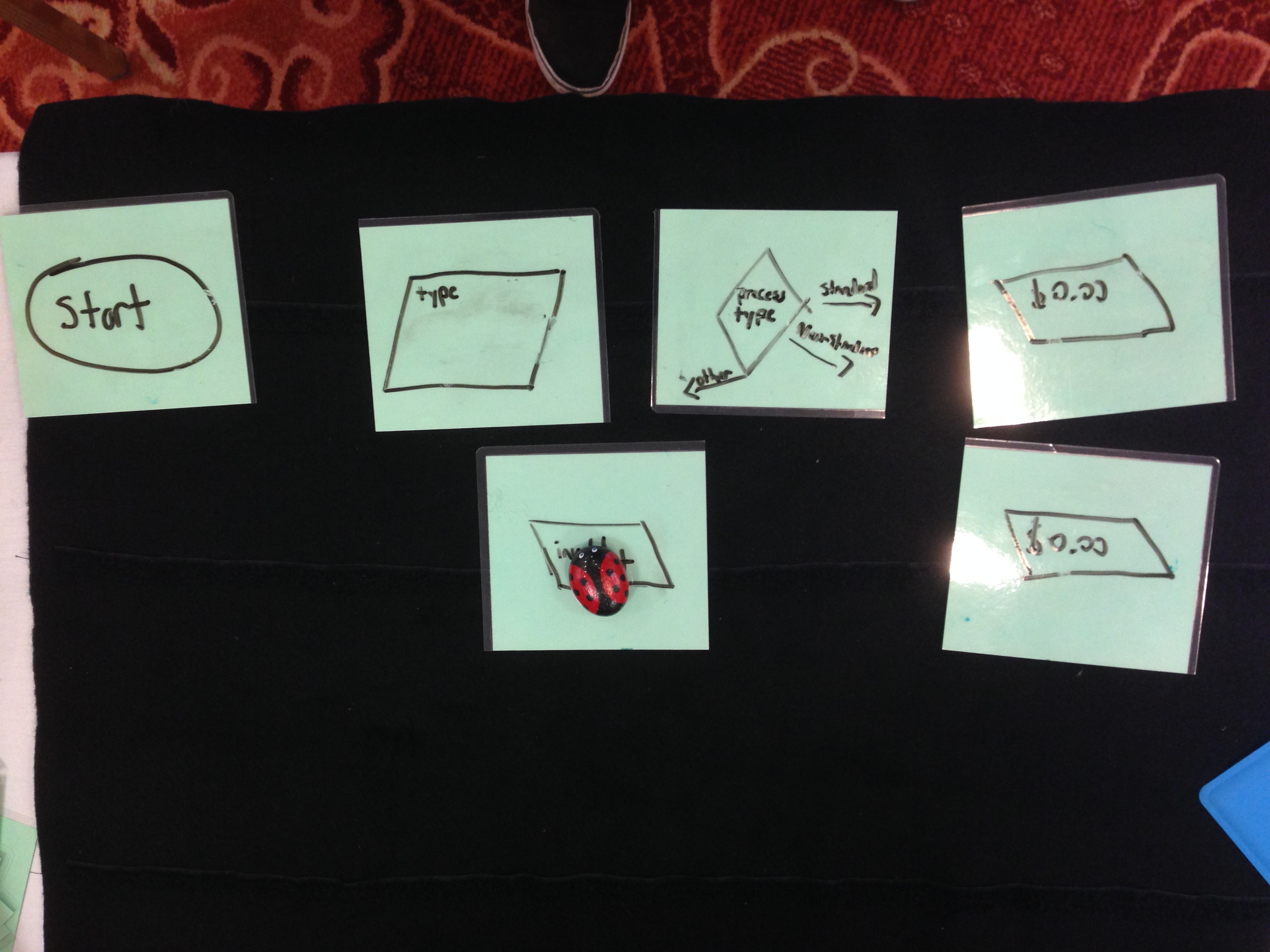

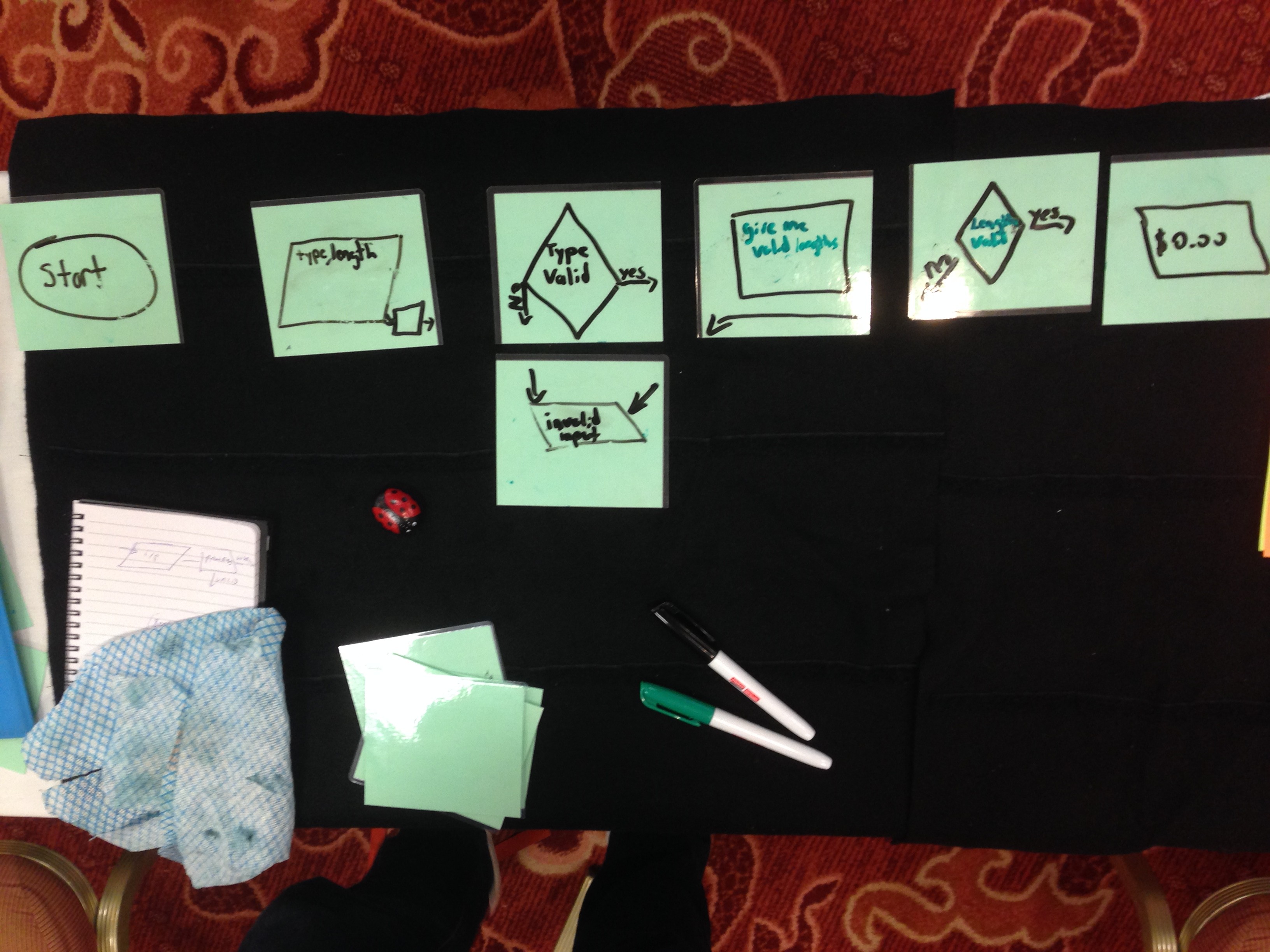

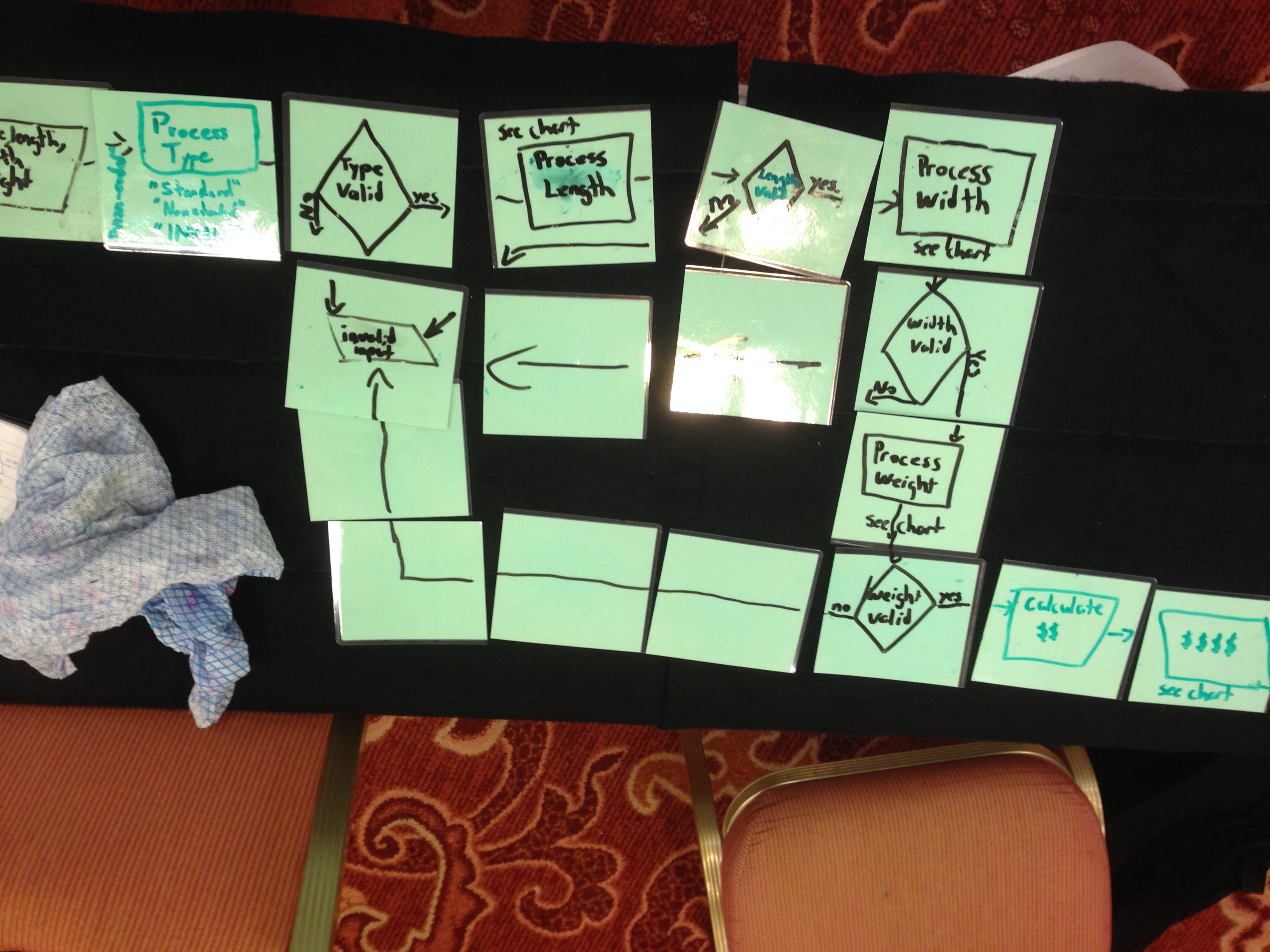

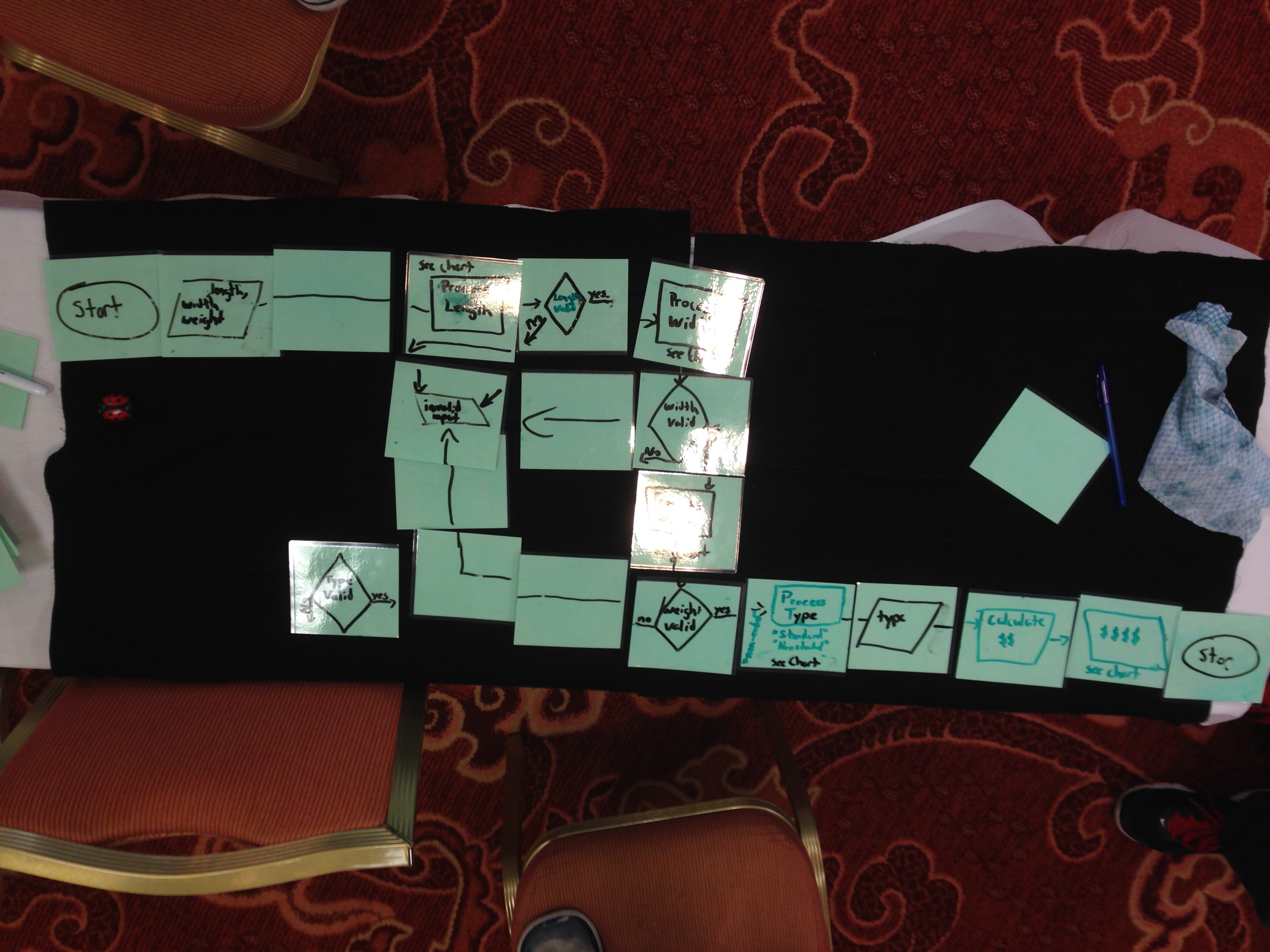

Then we moved on to an overview of our assignment. We would be developing a program, or at least the logic for a program represented as a flow chart. Yet in order to build our program, we needed to design our tests ahead of time. Once we felt we had built our tests, we were allowed to start “coding” the flow chart, but we weren’t supposed to build any part of the flow chart we didn’t have test coverage for.
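For readers who haven’t tried TDD in code, the same test-first discipline can be sketched in Python with the standard `unittest` module. This is an illustrative assumption, not the workshop’s exercise: the ticket-pricing rules below are a hypothetical stand-in for a couple of decision boxes in a flow chart, and `ticket_price` and `TicketPriceTest` are made-up names. The tests come first, and the function implements only the branches those tests cover.

```python
import unittest


# Step 1 (red): write the tests before any logic exists. Each test pins down
# one branch of a hypothetical flow chart: "Under 12? 65 or older? Negative?"
class TicketPriceTest(unittest.TestCase):
    def test_child_under_12(self):
        self.assertEqual(ticket_price(11), 5)

    def test_adult_boundary_at_12(self):
        self.assertEqual(ticket_price(12), 10)

    def test_senior_from_65(self):
        self.assertEqual(ticket_price(65), 7)

    def test_negative_age_rejected(self):
        with self.assertRaises(ValueError):
            ticket_price(-1)


# Step 2 (green): implement only the branches the tests above cover.
def ticket_price(age):
    if age < 0:
        raise ValueError("age cannot be negative")
    if age < 12:
        return 5
    if age >= 65:
        return 7
    return 10


if __name__ == "__main__":
    # exit=False lets the script keep running after the test report
    unittest.main(exit=False)
```

In a real TDD cycle the tests would fail (red) before `ticket_price` existed, pass once it was written (green), and then the code would be cleaned up with the tests as a safety net (refactor).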

I don’t want to give too much away about the requirements, but my team (Dwayne, Josh, Seshank and I, officially the “flowchart aficionados”) produced the following results:

We had some fun, made a visual, thought a lot about what tests we could have / should have created and how we misunderstood the requirements. Along the way we even found a bug or two in the requirements.

As a follow-up, I definitely need to contact Rob and get his slides. I’m even more interested in his conclusions and references regarding the differences between the “driven” approaches to testing and development listed above.

Exploratory Testing Cloud Foundry

"Sprint has always seemed like a weird word for something that's supposed to be done at a sustainable pace" #CAST2015 @JesseTAlford

— Josh Meier (@moshjeier) August 5, 2015

I think that’s the title Jesse ended up with; it differed from the planned title. Whether by accident or on purpose, I kept running into Jesse and picking his brain on a number of subjects that interested me. In fact, he gave a great lightning talk specifically on pairing with developers on Day 1.

Jesse was brought into Pivotal Software by Elisabeth Hendrickson because of his testing skills and now works on Cloud Foundry. I forget exactly how Jesse explained it, but Pivotal’s engineering culture is unique: I think they do a lot of pairing for both coding and testing, and engineers do the unit and integration testing based on a prioritized backlog.

He covered a lot of interesting aspects of how they work at Pivotal:

- Pairing with programmers, project managers, etc. to explore a product.

- Putting exploratory charters in the backlog of whatever project tracker you use to get input on priority

- Charters are used as a general-purpose scaffold to help learn about the product and are used to target known unknowns

- They sometimes time-box charters, but have found it’s not necessary when they have tight or narrow objectives or limited resources

I think Michael Larsen wrote more in his blog post; I could hear him typing away as he live-blogged the whole session!

I might be finding out that I am the "pairing and experiential exercise" guy, based on the things I keep finding myself saying. #CAST2015

— Jesse Thomas Alford (@JesseTAlford) August 4, 2015

Final Thoughts

Conferences are great for networking, and a lot of informal information gets passed around. I had some great hallway chats with so many different people, and without naming names I’d like to thank everyone who added to a discussion or lent their expertise to one of my questions. The discussions with the existing board members about AST, such as its history, operations, teaching and board positions, were quite fascinating as well.

I’ve read many other experience reports from CAST, since they were published in a more timely fashion than this one, and it’s been helpful to get those other perspectives. There was definitely a point where I hit the exhaustion threshold, and as much as I wanted to go home and relax, I didn’t, because I knew I’d miss something useful.

Looking forward, I’d like to consider writing a paper and giving a talk at CAST 2016, wherever it ends up being. I have no idea what it will be on, but I’m sure I can come up with something interesting. The same goes for the lightning talks. Until then!

#CAST2015 Shirt (Front) @mheusser @dynamoben @mikelyles @DawnMHaynes @carlshaulis @ckenst @FionaCCharles @ajay184f pic.twitter.com/O8oAsyJkIB

— Perze Ababa (@perze) August 8, 2015

Additional References

- Remembering CAST by Perze Ababa

- CAST is over, now what by Josh Meier

- CAST 2015 Quick Summary by Erik Brickarp

- One of my tutorial group members, Steve, wrote a review on the tutorial and CAST: